Resource Optimization Guardrails powered by Catalog Maps (Patent Pending)

Flexible Guardrails Reduce Risk and Drive Efficiency

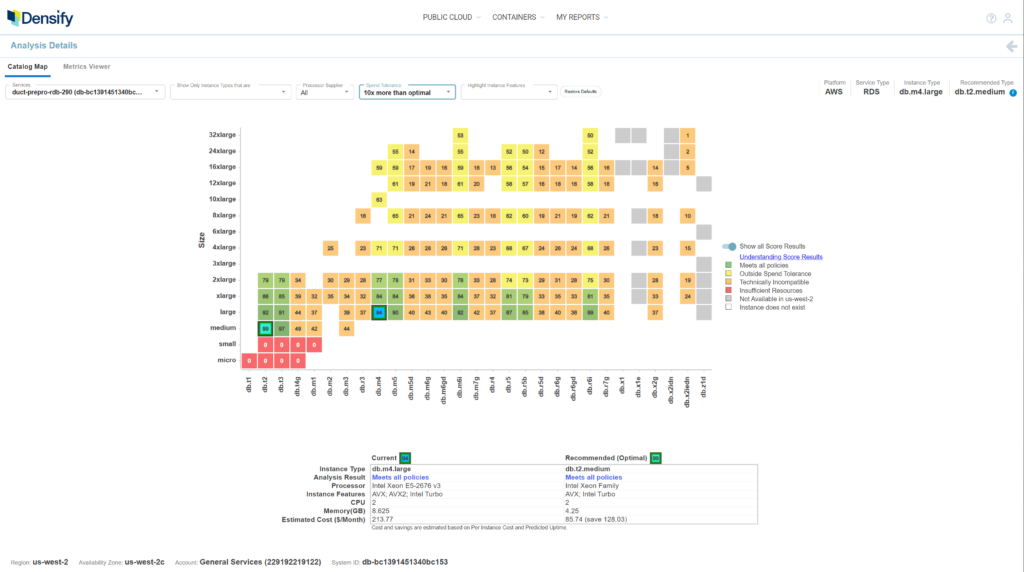

Guardrails enable FinOps teams to remove poor instance choices from the available cloud catalog. Choices that are suboptimal for performance or excessively wasteful are simply not available, leaving only the best options. All of this is tunable with respect to a cost overage tolerance.

Machine learning analyzes each workload against the entire cloud catalog to establish guardrails for every workload. Guardrails encourage good decisions while discouraging the selection of instances that should be avoided by quickly showing a developer or app owner instances that are:

- Too small for a workload to perform well

- Not a technical fit due to requirements such as GPUs, local disks, or the right number of network interfaces

- Too costly based on a tunable ‘spend tolerance’ that scores the cost of each instance against the most cost-effective option

- Good candidates for hosting your workload, as they meet all performance, technical, and financial constraints

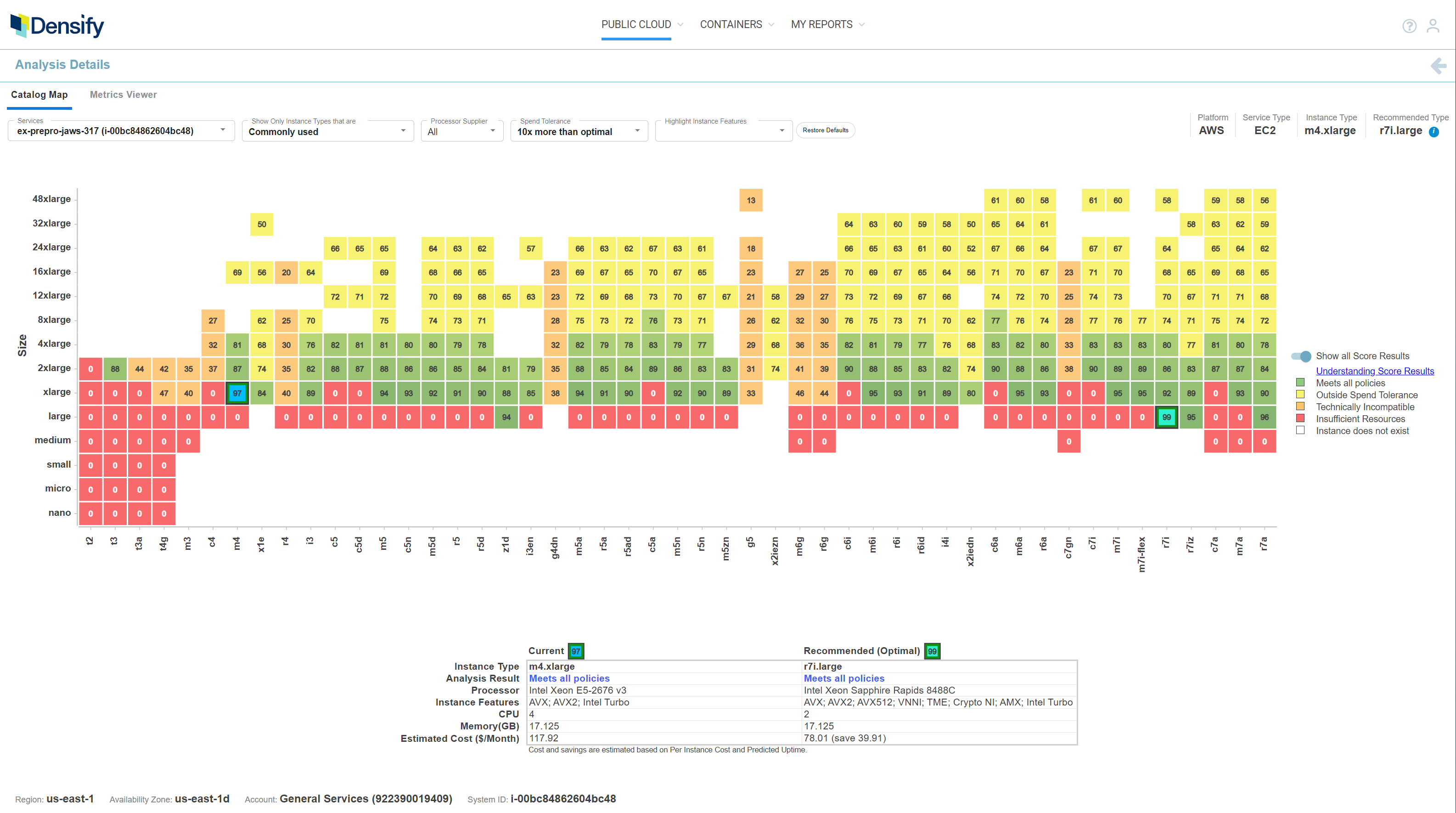

(Catalog Map filtered to show only commonly used instance types)
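The four categories above can be illustrated with a minimal sketch. It is not Densify's implementation: the instance names, prices, fields, and the `classify` function are all hypothetical, and the machine learning analysis is replaced here by simple threshold checks. It assumes ‘spend tolerance’ is a multiple of the cheapest instance that meets the performance and technical requirements.

```python
from dataclasses import dataclass
from enum import Enum


class Verdict(Enum):
    TOO_SMALL = "too small"
    NOT_A_FIT = "not a technical fit"
    TOO_COSTLY = "too costly"
    GOOD = "good candidate"


@dataclass(frozen=True)
class Instance:
    # Hypothetical catalog entry; real catalogs carry many more attributes.
    name: str
    vcpus: int
    memory_gib: float
    has_local_disk: bool
    hourly_cost: float


@dataclass(frozen=True)
class Workload:
    # Hypothetical requirements, assumed to come from prior analysis.
    min_vcpus: int
    min_memory_gib: float
    needs_local_disk: bool = False


def big_enough(inst: Instance, wl: Workload) -> bool:
    return inst.vcpus >= wl.min_vcpus and inst.memory_gib >= wl.min_memory_gib


def technical_fit(inst: Instance, wl: Workload) -> bool:
    return inst.has_local_disk or not wl.needs_local_disk


def classify(catalog, wl, spend_tolerance=1.5):
    """Map each instance name to a Verdict for the given workload."""
    eligible = [i for i in catalog if big_enough(i, wl) and technical_fit(i, wl)]
    # Baseline: the cheapest instance that satisfies every requirement.
    baseline = min((i.hourly_cost for i in eligible), default=None)
    verdicts = {}
    for inst in catalog:
        if not big_enough(inst, wl):
            verdicts[inst.name] = Verdict.TOO_SMALL
        elif not technical_fit(inst, wl):
            verdicts[inst.name] = Verdict.NOT_A_FIT
        elif inst.hourly_cost > spend_tolerance * baseline:
            verdicts[inst.name] = Verdict.TOO_COSTLY
        else:
            verdicts[inst.name] = Verdict.GOOD
    return verdicts


# Made-up catalog and prices, for illustration only.
catalog = [
    Instance("tiny", 2, 4, True, 0.05),
    Instance("medium-nodisk", 4, 16, False, 0.08),
    Instance("medium", 4, 16, True, 0.10),
    Instance("large", 8, 32, True, 0.14),
    Instance("xlarge", 16, 64, True, 0.40),
]
workload = Workload(min_vcpus=4, min_memory_gib=16, needs_local_disk=True)
result = classify(catalog, workload, spend_tolerance=1.5)
```

With a spend tolerance of 1.5, the cheapest eligible instance (`medium`) sets the baseline, `large` stays within tolerance, and `xlarge` is flagged as too costly. Raising the tolerance widens the set of good candidates.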

Empowering Engineers

Avoid having to hassle Engineers about resource selections, freeing them to focus on innovating:

- Give them the freedom to select from multiple instance types that meet all performance and technical requirements, while staying within FinOps financial requirements

- Minimize interruptions by only notifying them when they are operating outside of agreed-upon guidelines

- Avoid unwanted issues that could be caused by deploying software on technically incompatible or underperforming instance types

(Catalog Map filtered to only show commonly used instance types)

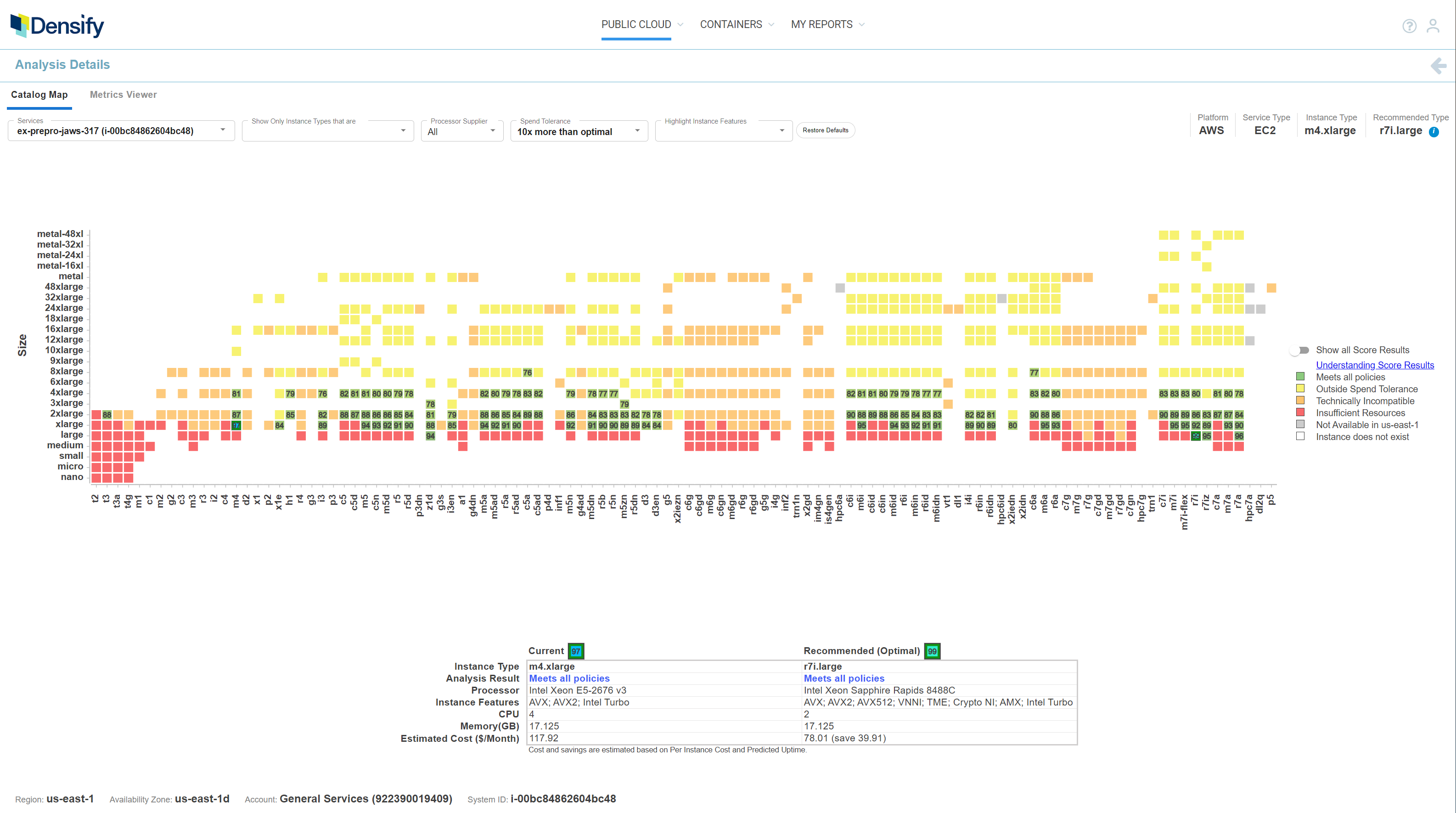

Empowering FinOps

Increase the cost efficiency of your cloud infrastructure by:

- Implementing guardrails, developed in partnership with Engineers, to ensure that financial efficiency is achieved without inhibiting technical innovation

- Building trust by helping Engineers to avoid problems caused by performance issues or using technically incompatible instance types

- Introducing a ‘Spend Tolerance’ guardrail that highlights when an instance being deployed costs more than an agreed-upon multiple of the optimal instance cost

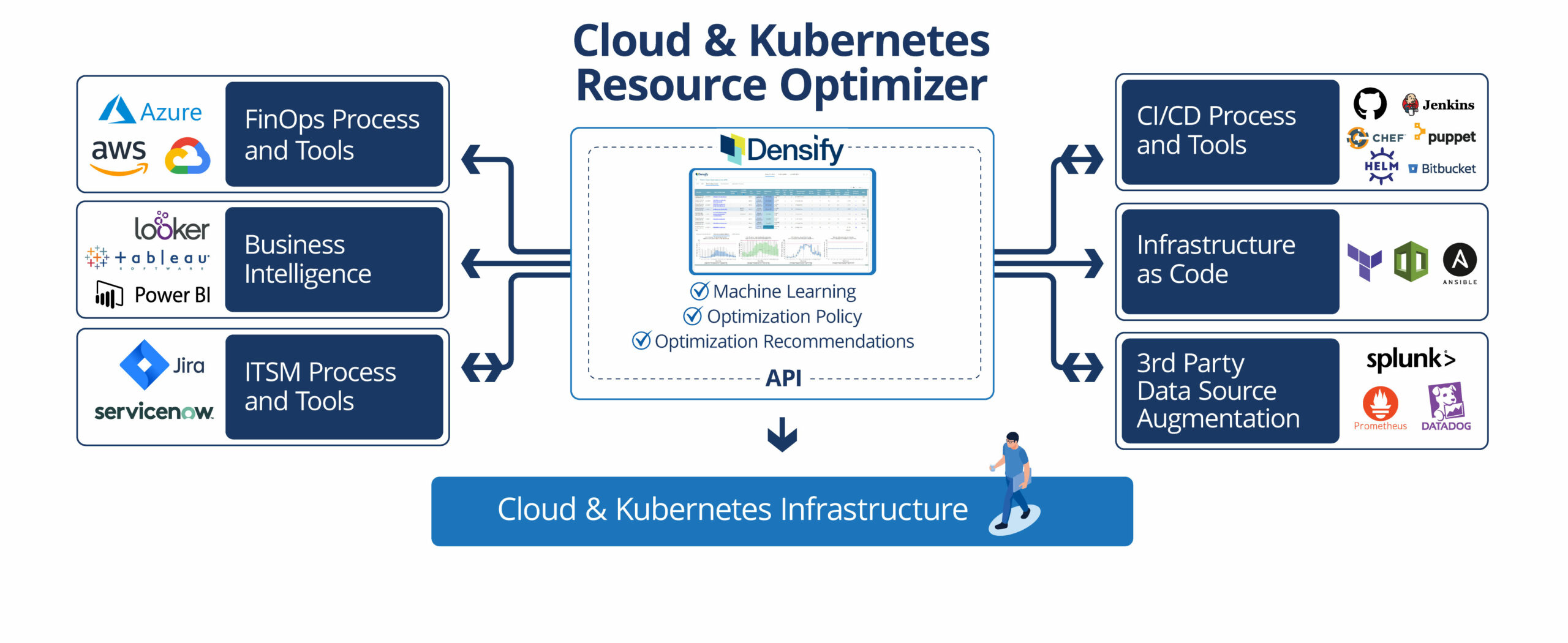

Seamless Integration with Policy Frameworks

Densify integrates with your existing policy frameworks, such as HashiCorp Sentinel, Azure Policy, and AWS Config, to ensure that deployed workloads comply with your organization’s resource requirement guidelines.

- Take advantage of policy frameworks’ ability to automate notifications, or block deployments, when an instance type is outside the agreed-upon guidelines for a given workload

- Codify resource guardrails by taking advantage of policy rules that integrate with Densify’s APIs

- Extend existing frameworks already used for managing security and other compliance use cases to easily introduce resource-based guardrails
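The notify-or-block behavior described above can be sketched in a framework-neutral way. This is only an illustration of the decision logic: it does not use Sentinel, Azure Policy, AWS Config, or Densify's APIs, and the `evaluate_deployment` function, the `Decision` type, and the instance names are hypothetical. It assumes the set of approved instance types for a workload has already been obtained.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    allowed: bool   # may the deployment proceed?
    notify: bool    # should the owner be notified?
    message: str


def evaluate_deployment(requested_type, approved_types, mode="notify"):
    """Decide what to do with a requested instance type.

    mode="notify": allow the deployment but flag it.
    mode="block":  reject the deployment and flag it.
    """
    if mode not in ("notify", "block"):
        raise ValueError(f"unknown enforcement mode: {mode!r}")
    if requested_type in approved_types:
        # Inside the guardrails: no interruption for the engineer.
        return Decision(True, False, f"{requested_type} is within guidelines")
    message = (
        f"{requested_type} is outside the agreed-upon guidelines; "
        f"approved types: {', '.join(sorted(approved_types))}"
    )
    return Decision(mode != "block", True, message)


# Hypothetical approved set for one workload.
approved = {"medium", "large"}
ok = evaluate_deployment("large", approved)
warned = evaluate_deployment("xlarge", approved, mode="notify")
blocked = evaluate_deployment("xlarge", approved, mode="block")
```

In practice this check would be expressed in the policy language of the framework in use; the sketch only shows that compliant requests pass silently, while non-compliant ones trigger either a notification or a rejection.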